In 2019, word filtered out that a quantum computer built by Google had performed calculations that the company claimed would be effectively impossible to replicate on supercomputing hardware. That turned out to not be entirely correct, since Google had neglected to consider the storage available to supercomputers; if that were included, the quantum computer's lead shrank to just a matter of days.

Adding just a handful of additional qubits, however, would re-establish the quantum computer's vast lead. Recently, however, a draft manuscript was placed on the arXiv that points out a critical fact: Google's claims relied on comparisons to a very specific approach to performing the calculation on standard computing hardware. There are other ways to perform the calculation, and the paper suggests one of those would allow a supercomputer to actually pull ahead of its quantum competitor.

More than one road to random

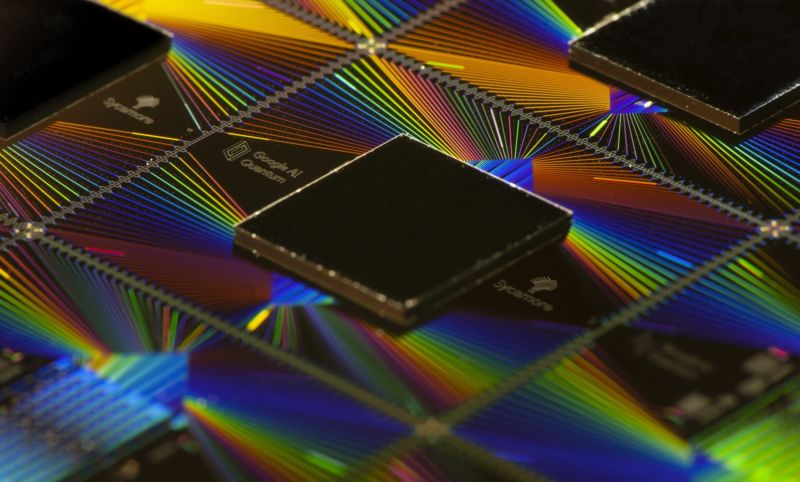

The calculation Google performed was specifically designed to be difficult to simulate on a normal computer. It set the 54 qubits of its Sycamore processor in a random state, then let quantum interference among neighboring qubits influence how the system evolves over time. After a short interval, the hardware started repeatedly measuring the state of the qubits. Each individual measurement produced a string of random bits, making Sycamore into a very expensive random-number generator. But if enough measurements are made, certain patterns generated by the quantum interference become apparent.

Because the rules of this quantum interference are understood, it's also possible to calculate the patterns we should see in the random numbers generated by Sycamore. But doing those calculations is very computationally expensive and gets more expensive with each additional qubit in the system. Google estimated that it would take an unrealistically long time to perform these calculations on the world's most advanced supercomputers.

The one flaw with this argument that was pointed out early in the process was that Google neglected to consider the storage attached to the world's then-largest supercomputer, which would significantly cut into Sycamore's lead. But the reality remained that these computations were very difficult for classical computing hardware.

The new manuscript focuses on a key aspect of that phrase: these computations. Google chose a very specific method of computing the expected behavior of its processor, but there are other ways of doing equivalent computations. Over the intervening time, a few options have been explored that do perform better. Now, Feng Pan, Keyang Chen, and Pan Zhang are describing a specific method that allows a GPU-based cluster to produce an equivalent output in only 15 hours. Run it on a leading supercomputer, and they estimate that it would outperform the Sycamore quantum processor.

Some light-on-math descriptions

There are several ways to look at what Pan, Chen, and Zhang accomplished. We'll try three of them, getting progressively deeper into the math as we go along.

The simplest way of viewing this is in terms of the output that Sycamore provided. Individual measurements of the state of the qubits in the Sycamore processor produced a string of truly random ones and zeros, but patterns would become apparent if you ran enough measurements of a single initial state of the processor. If you set up a classical calculation that recapitulates the physics of Sycamore, you would get the same level of randomness and the same patterns.

What the new paper describes is a way to trade off some of the fidelity of the calculated reconstruction of the processor's behavior but gain much faster calculations in the process. In other words, the new calculations don't exactly recapitulate the behavior of Sycamore, but they still produce the patterns and the underlying randomness, and they can be completed much faster.

That's explanation one. Option No. 2 for understanding this involves considering how the starting state of the Sycamore processor evolves into its state at the point of measurement. Multiple possible paths will get there and, this being a quantum system, it explores all of them. To get an exact model of the Sycamore processor's output, you'd also have to explore all the paths, which is very computationally intensive. Pan, Chen, and Zhang found a means of limiting the paths you look at that makes the calculations tractable while still achieving an equivalent output.

Those of you who want to avoid math should now skip to the next section header. The actual calculation method describes the interactions of Sycamore's qubits as a three-dimensional tensor network, with the tensors dictating the relationships among the properties of the qubits. The algorithm then simplifies this down by severing some of the network's connections—the researchers describe this as doing the equivalent of drilling three-dimensional holes through the network.

Each hole you drill cuts the fidelity of its calculations in half. But that makes the fidelity completely adjustable: you can ensure you have a sufficient recapitulation of Sycamore's behavior simply by limiting the number of holes you drill. The mathematics of where those holes were drilled within the network were dictated by the physical structure of the Sycamore chip itself.

The resulting contracted tensor network was significantly easier to model, although the researchers had to split it into subtasks that could be stored on the system they were working on. And they used their algorithm to model the behavior of smaller qubit networks on Sycamore and showed that it produced results that were accurate within their fidelity limit.

reader comments

75