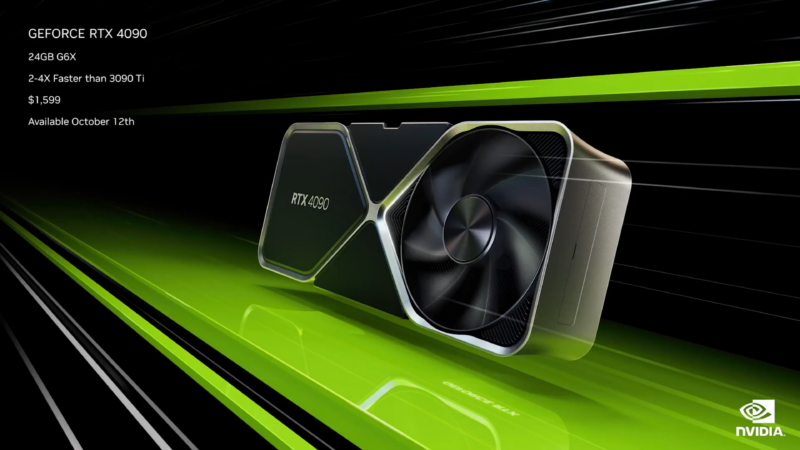

After weeks of teases, Nvidia's newest computer graphics cards, the "Ada Lovelace" generation of RTX 4000 GPUs, are here. Nvidia CEO Jensen Huang debuted two new models on Tuesday: the RTX 4090, which will start at a whopping $1,599, and the RTX 4080, which will launch in two configurations.

The pricier card, slated to launch on October 12, occupies the same highest-end category as Nvidia's 2020 megaton RTX 3090 (previously designated by the company as its "Titan" product). The 4090's larger physical size means it will take up three slots in your PC build of choice. Its specs are those of a highest-end GPU: 16,384 CUDA cores (up from the 3090's 10,496) and a 2.52 GHz boost clock (up from the 3090's 1.695 GHz). Despite these improvements, the card stays within a 450 W power envelope, the same as the 3090 Ti. Its memory allocation remains 24GB of GDDR6X.

This jump in performance is fueled in part by Nvidia's long-rumored move to TSMC's "4N" process, a 5 nm-class node that promises a major efficiency gain over the previous Ampere generation's 8 nm process.

Meanwhile, the RTX 4080 will follow in November in two SKUs: a 12GB GDDR6X model (192-bit bus) starting at $899 and a 16GB GDDR6X model (256-bit bus) starting at $1,199. Judging by how much the two models' specs differ, however, Nvidia appears to be launching two entirely different chips under the same "4080" banner; traditionally, Nvidia has sold hardware this different under separate model names (e.g., last generation's 3070 and 3080). We're awaiting firmer confirmation from Nvidia on whether the two 4080 models share a chip.

The pricier of the two has more CUDA cores (9,728, up from the RTX 3080's 8,704) and a higher boost clock (2.51 GHz, up from the 3080's 1.71 GHz). Its 320 W power draw matches the 3080's and exceeds the 12GB 4080's 285 W. At least for this generation, Nvidia is offering 12GB of memory as the 4080 baseline, effectively addressing a major criticism of the consistently memory-starved RTX 3000 generation of GPUs.

Micromap, micromesh

Both new models include iterative updates to Nvidia's proprietary RTX hardware elements: RT cores and tensor cores. Alongside these, Nvidia has announced updates to both its handling of real-time ray tracing in 3D graphics and its Deep Learning Super Sampling (DLSS) upscaling system. On Lovelace GPUs, ray tracing will be augmented with two new types of hardware units: an "opacity micromap engine," meant to double raw ray-tracing performance, and a "micromesh engine," meant to increase geometric complexity "without storage cost" on the rendering front.

The latter has been bumped to a new version, DLSS 3, which appears to be exclusive to the RTX 4000 series. According to Huang, this system promises to "generate new frames [of gameplay] without involving the game, effectively boosting both CPU and GPU performance." This real-time generation of entire frames is designed to address a weakness of image reconstruction techniques that rely on game-supplied motion vectors, which cannot always capture the motion of in-game elements like particles. Combined with the techniques established by prior DLSS generations, this new method could reconstruct a whopping 7/8 of a scene's pixels, thus reducing computational demands on both GPUs and CPUs. If it works as promised, DLSS 3 could deliver a substantial improvement over the pixel-by-pixel process that has been so successful across both earlier DLSS versions and their competitors, AMD's FSR 2.0 and Intel's upcoming XeSS.
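The 7/8 figure can be reproduced with simple arithmetic, under two assumptions not spelled out above: that DLSS upscaling runs in its Performance mode, rendering one quarter of each output frame's pixels (for example, 1920×1080 internally for a 3840×2160 output), and that frame generation synthesizes every other displayed frame in its entirety. Over one pair of displayed frames, the fraction of pixels actually rendered by the game is then:

$$
f_{\text{rendered}} = \frac{1920 \times 1080}{3840 \times 2160} \times \frac{1}{2} = \frac{1}{4} \times \frac{1}{2} = \frac{1}{8},
\qquad
f_{\text{reconstructed}} = 1 - \frac{1}{8} = \frac{7}{8}
$$

Under upscaling modes that render a larger share of each frame, the reconstructed fraction would be correspondingly smaller.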

On top of those proprietary systems, Nvidia's newest GPUs will apparently lean on a new process that Huang calls "shader execution reordering." While this should also improve raw rasterization performance, Huang's brief description focused largely on ray tracing's computationally expensive workloads, where he said the system will drive "2-3 times" the ray-tracing performance of the company's previous Ampere generation of GPUs.

DLSS 3 demonstration on an unnamed RTX 4000 system.

As part of today's announcements, Nvidia presented a few titles with upcoming Nvidia RTX-specific optimizations, and the biggest is arguably Microsoft Flight Simulator 2020. When combined with DLSS 3's image reconstruction system, MSFS was demonstrated with frame rates easily reaching the 100s in some of the game's busiest scenes (though raw rasterization of the same scenes on an unnamed Lovelace GPU and PC showed solid performance as well, considering how power-hungry that game and its massive cityscapes can be). The above gallery is captured in 4K, so you may want to click and zoom in to see how DLSS 3 handles fine pixel details compared to an apparent combination of raw pixels and standard temporal anti-aliasing (TAA) in older systems.

RTX Remix, after.

RTX Remix, before.

Huang also tried to curry favor with the PC modding scene by demonstrating how a new Nvidia-developed toolset, dubbed RTX Remix, can be applied to a number of classic games whose modding capabilities are wide open. The best results came from a before-and-after demonstration applied to Elder Scrolls III: Morrowind, which used RTX GPU tensor cores to programmatically update the game's textures and physical material properties. (See above, or go to Nvidia's site for even more before-and-after comparisons.) Similar results are expected from a free Portal 1 DLC package that Nvidia will release for fans to apply to that PC gaming classic in November. We'll be curious to see head-to-head showdowns of Nvidia's automatic modding results compared to the best stuff from over a decade of community development.

Ars Technica may earn compensation for sales from links on this post through affiliate programs.