Nvidia's New Chips Power AI, Autonomous Vehicles, Metaverse Tools

The chip design giant's H100 GPU pays homage to computer science pioneer Grace Hopper.

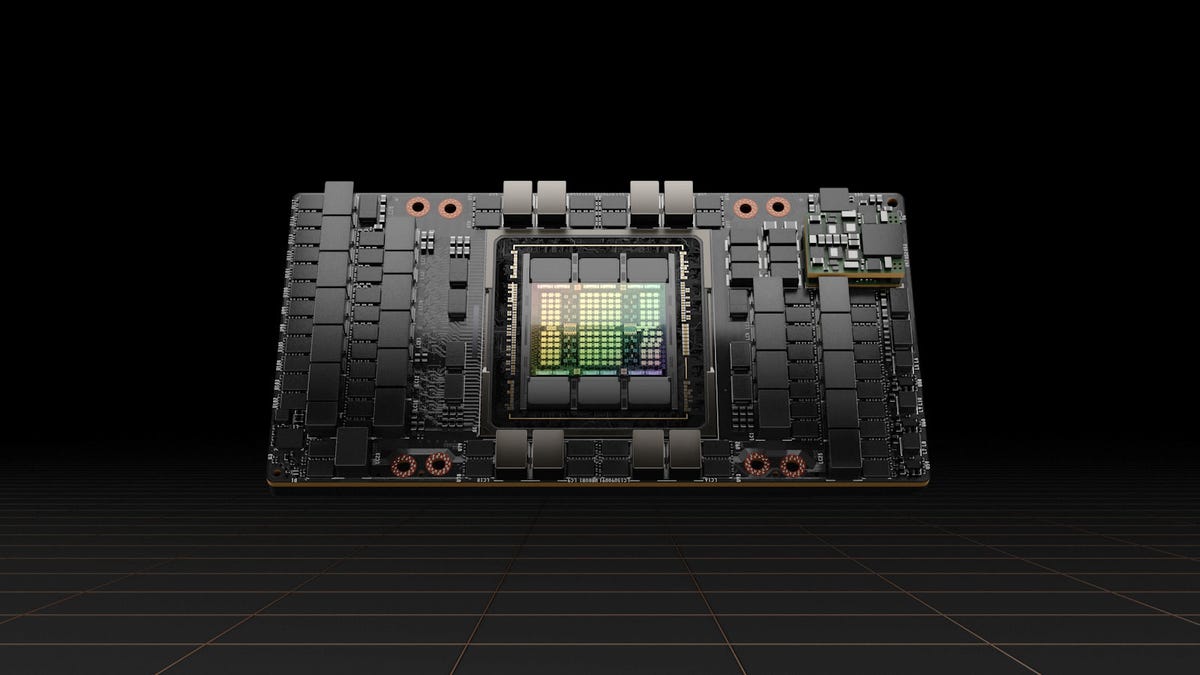

Nvidia's Hopper architecture, debuting in its H100 chip, speeds up graphics, scientific calculations and artificial intelligence.

Unless you're a gamer eager for the fastest graphics, you might not have the faintest idea of what chip designer Nvidia does. But its processors, including a host of new models announced Tuesday at its GTC event, are the brains in a broadening collection of digital products like AI services, autonomous vehicles and the nascent metaverse.

At the show, Nvidia announced its new H100 AI processor, a successor to the A100 that's used today for training artificial intelligence systems to do things like translate human speech, recognize what's in photos and plot a self-driving car's route through traffic.

It's the highest-end member of the family of graphics processing units that propelled Nvidia to business success. With the company's NVLink high-speed communication pathway, customers can also link as many as 256 H100 chips to each other into "essentially one mind-blowing GPU," Chief Executive Jensen Huang said at the online conference.

The H100 is based on a design called Hopper, and it can be paired with Nvidia's new central processing unit, called Grace, Nvidia said at its GPU Technology Conference. The names are an homage to computing pioneer Grace Hopper, who worked on some of the world's earliest computers, developed one of the first compilers (a crucial programming tool), codeveloped the COBOL programming language and popularized the term "bug."

With those and other GTC announcements, Nvidia has a broad collection of processor designs touching many parts of our digital lives. Nvidia may not be as well known as Intel or Apple when it comes to chips, but the Silicon Valley company is just as important in making next-gen technology practical.

Nvidia also announced the RTX A5500, a new member of its Ampere series of graphics chips for professionals who need graphics power for 3D tasks like animation, product design and visual data processing. That dovetails with Nvidia's expanded Omniverse efforts to sell the tools and cloud computing services needed to build the 3D realms called the metaverse.

The H100 GPUs will ship in the third quarter, and Grace is "on track to ship next year," Huang said.

There's plenty of competition for the H100, which packs a whopping 80 billion transistors into its data processing circuitry and is manufactured by Taiwan Semiconductor Manufacturing Co. (TSMC). Rivals include Intel's upcoming Ponte Vecchio processor, with more than 100 billion transistors, and a host of special-purpose AI accelerator chips from startups like Graphcore, SambaNova Systems and Cerebras.

Nvidia also packages the H100 into its DGX computing modules, which can be interlinked into vast systems called SuperPods. Meta, formerly Facebook, uses earlier DGX machines in its Research SuperCluster, which the company estimates is the fastest AI computer in the world. Nvidia said it expects to outpace that machine with its own DGX SuperPod system, called Eos.

One of Nvidia's highest-profile competitors is Tesla, whose D1 chip powers its Dojo technology to train autonomous vehicles. Dojo, when up and running, will displace Nvidia chips, the carmaker said. Tesla also has designed its own processors for running those AI models in the cars themselves.

Nvidia is making progress, too. It announced that two electric vehicle makers, Lucid and China-based BYD, are using its Orin AI chips in their cars. It also announced it's working on a new generation of its Hyperion platform for self-driving cars, due to arrive in 2026. Nvidia now expects $11 billion in car chip business over the next six years, up from the $8 billion it projected last year.

More uncertain is how much Nvidia will profit from the metaverse. Its Omniverse effort spans several related kinds of work, including collaborative 3D design through the cloud and digital twins that mirror parts of the real world inside a computing system.