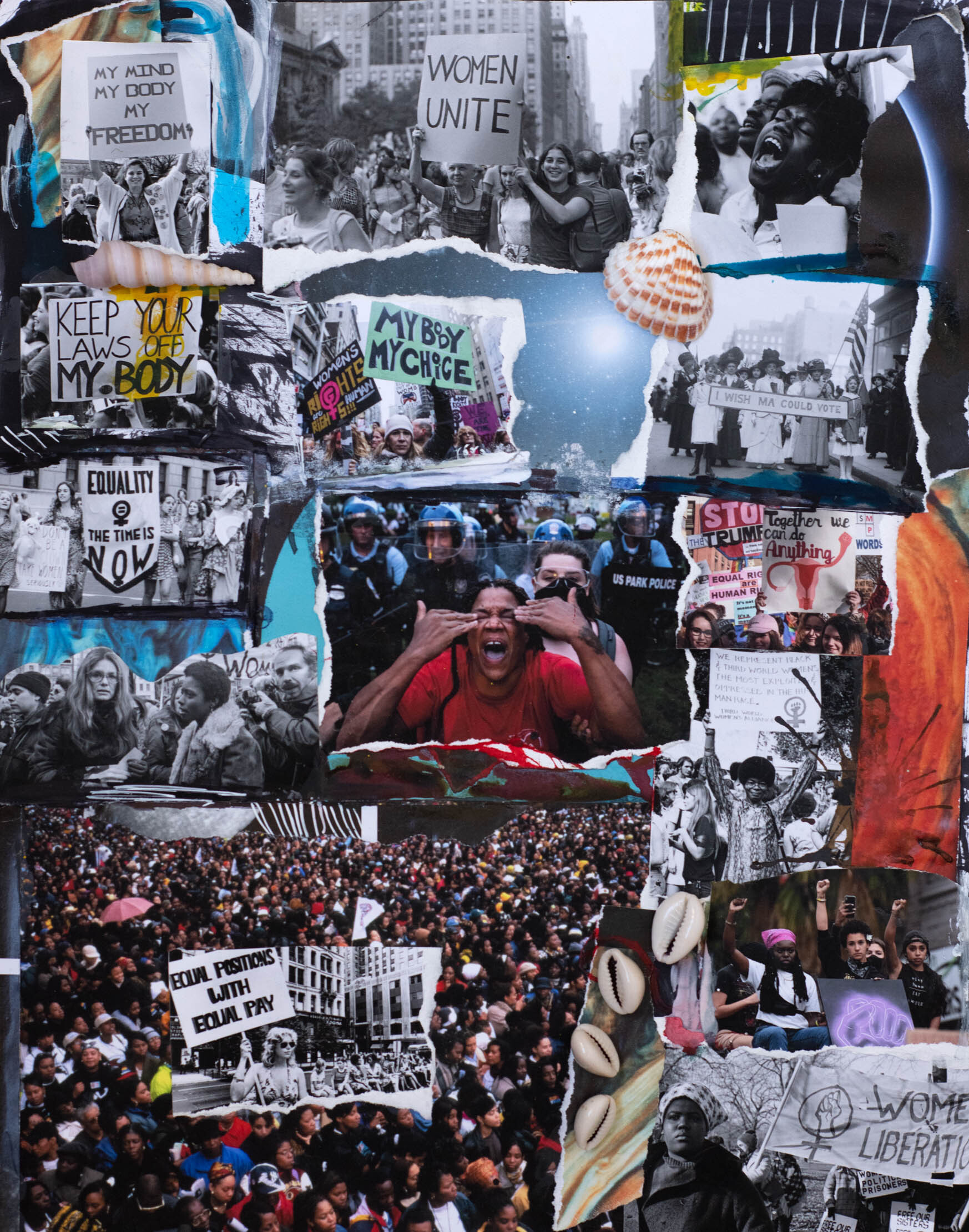

Photo Illustration: Delphine Diallo; Photos: Getty

It’s Getting Harder to Be a Woman in America

The US welcomes the employment and economic advancement of women—yet doesn’t actually support them. We’ve finally hit a breaking point.

When Roe v. Wade was overturned, I didn’t know about it for an hour. The ruling in Dobbs v. Jackson Women’s Health Organization came down around 10 a.m. EDT on a Friday in late June, while I was interviewing a woman who’d given birth in a hospital hallway because all of its delivery rooms were full. After we hung up, I had about 60 missed text messages from friends. I read the texts. Then the news. And then I went back to work. At the time, I was 24 weeks pregnant.

In the weeks since, I’ve found myself dwelling not on the immediate effects of the decision and what it means for my 2-year-old daughter’s future or even my own pregnancy, but on all the ways this country has shunted onto women the responsibility of keeping its society and economy running. I’ll fold my toddler’s onesies and think about how the US, a country in which two-thirds of mothers of young children work, has such a forceful “pro-life” movement, and yet it’s the only wealthy nation that doesn’t guarantee new moms time off work after they have a baby.