'Horrible experiment' appears to show that Twitter's cropping tool is racially biased

A programmer who carried out a "horrible experiment" believes Twitter's automated tool favours the faces of white people.

Tuesday 22 September 2020 05:55, UK

Twitter has launched an investigation after users claimed that its image cropping feature favours the faces of white people.

A tool on the social network's mobile app automatically crops pictures that are too big to fit on the screen, selecting which parts of an image should be cut off.

But an experiment from a graduate programmer appeared to show racial bias.

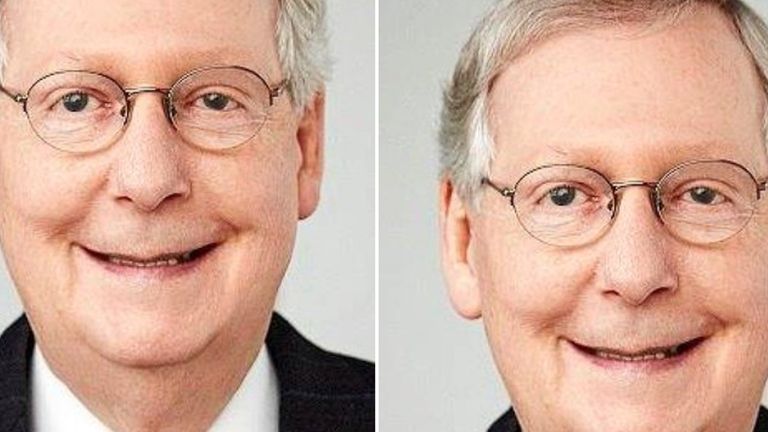

To see what Twitter's algorithm would pick, Tony Arcieri posted a long image featuring headshots of Senate Republican leader Mitch McConnell at the top and former US president Barack Obama at the bottom, separated by white space.

In a second image, Mr Obama's headshot was placed at the top, with Mr McConnell's at the bottom.

Both times, the former president was cropped out altogether.

Following the "horrible experiment", which came after an image he posted cropped out a black colleague, Mr Arcieri wrote: "Twitter is just one example of racism manifesting in machine learning algorithms."

At the time of writing, his experiment has been retweeted 78,000 times.

Twitter has vowed to look into the issue, but said in a statement: "Our team did test for bias before shipping the model and did not find evidence of racial or gender bias in our testing.

"It's clear from these examples that we've got more analysis to do. We'll continue to share what we learn, what actions we take, and will open source our analysis so others can review and replicate."

A Twitter representative also pointed to research from a Carnegie Mellon University scientist who analysed 92 images. In that experiment, the algorithm favoured black faces in 52 of them.

Back in 2018, the company said the tool was based on a "neural network" that uses artificial intelligence to predict which part of a photo would be interesting to a user.
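Twitter has not detailed here how its model scores a photo. As a rough, hypothetical illustration only, a "predict what is interesting, then crop" tool can be sketched in Python. The sketch assumes a separate model (not shown) has already given every pixel a "saliency" score, and that the crop is simply centred on the highest-scoring point. The function name `crop_around_peak` and the saliency map input are assumptions for illustration, not Twitter's code.

```python
import numpy as np


def crop_around_peak(image, saliency, crop_h, crop_w):
    """Hypothetical sketch: cut a crop_h x crop_w window centred, as far as
    the image edges allow, on the single highest-scoring saliency pixel.

    Assumption: `saliency` is a 2-D array with the same height and width
    as `image`, produced by some saliency model that is not shown here.
    """
    h, w = saliency.shape
    if crop_h > h or crop_w > w:
        raise ValueError("crop window is larger than the image")

    # Row and column of the point the (assumed) model rates most "interesting".
    peak_row, peak_col = np.unravel_index(np.argmax(saliency), saliency.shape)

    # Centre the window on that point, then clamp it so it stays inside the image.
    top = int(np.clip(peak_row - crop_h // 2, 0, h - crop_h))
    left = int(np.clip(peak_col - crop_w // 2, 0, w - crop_w))

    # Slicing only the first two axes works for greyscale or colour images.
    return image[top:top + crop_h, left:left + crop_w], (top, left)
```

For a tall 300 x 100 image whose saliency peaks near the bottom, a 100 x 100 crop would start at row 200, so everything above it is cut off. If a tool works along these lines, the highest score wins the crop outright: in a two-face test like Mr Arcieri's, whichever face the model rates even slightly higher is the one that stays visible.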

Meredith Whittaker, the co-founder of the AI Now Institute, told the Thomson Reuters Foundation: "This is another in a long and weary litany of examples that show automated systems encoding racism, misogyny and histories of discrimination."